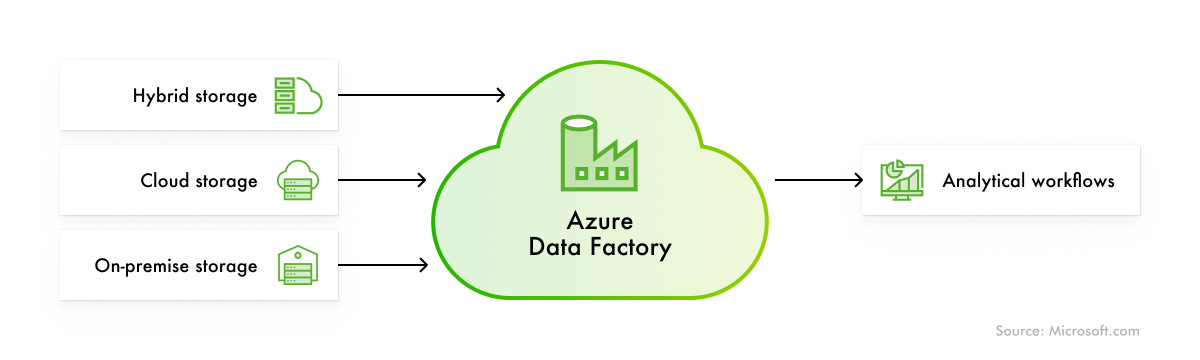

Azure Data Factory shines when organizations need to integrate, orchestrate, and manage data across multiple systems efficiently. Let’s explore the most common business scenarios where ADF’s capabilities deliver clear value.

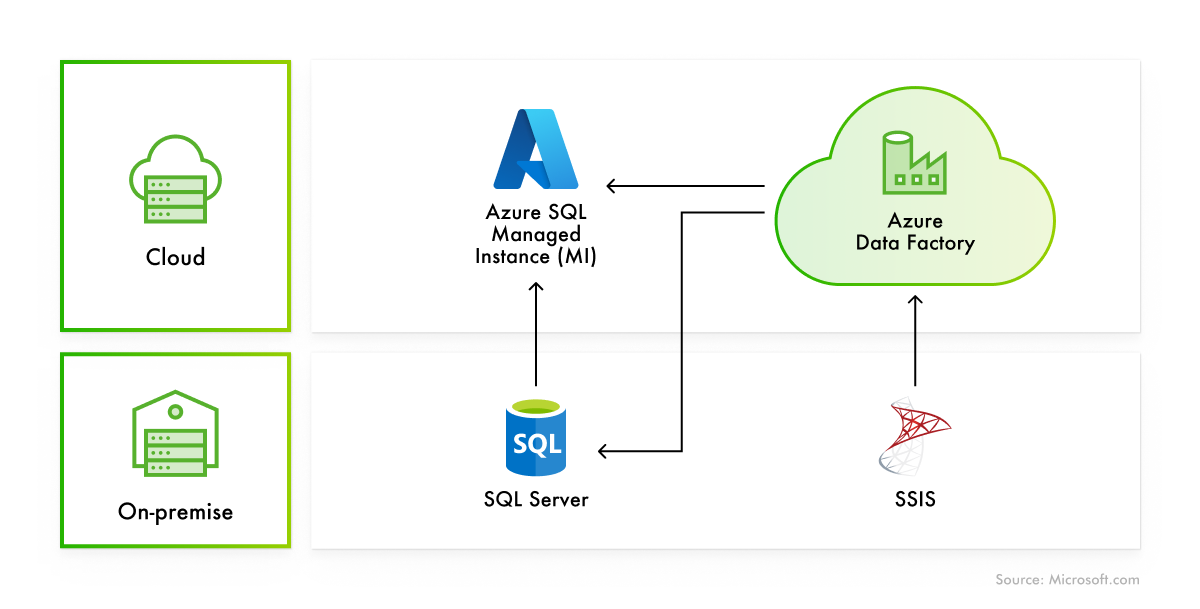

Enterprise data migration to the cloud

With its data ingestion and integration capabilities, Azure Data Factory offers an effective solution for enterprises that need to securely and efficiently migrate large volumes of data from on-premises or legacy systems to the cloud. ADF works well even in environments with diverse infrastructures spanning on-premises, cloud, and hybrid deployments. The service uses its broad set of connectors and integration runtimes to connect to a wide range of data sources, which helps keep data movement unified and minimizes disruption to ongoing operations.

Post-merger unification of data infrastructure

ADF is an efficient solution for unifying the data assets of different companies after mergers and acquisitions. The service excels at integrating disparate systems, databases, and applications from different organizations into a single, consistent environment.

For instance, the Leobit team helped a global e-commerce company build an efficient workflow for data orchestration and business intelligence using ADF’s integration and ingestion capabilities. Later, when the company was acquired by a larger enterprise, this workflow became a solid foundation for unifying data assets between the two organizations.

Advanced analytics and AI development

ADF serves as a foundation for AI and machine learning by helping to prepare clean, reliable, and well-structured datasets. It also integrates with Azure’s AI and machine learning offerings, such as Azure Machine Learning. Because Azure Data Factory automates the collection and preparation of data, it is a valuable tool for designing data flows that feed and support ML models.

Unified reporting and business intelligence

Azure Data Factory allows organizations to eliminate data silos across departments and platforms. By consolidating multiple sources, such as marketing systems and CRMs, into a unified, well-structured data model, it supports consistent and comprehensive analytics using tools such as Power BI and Azure Synapse Analytics. This makes ADF a strong foundation for implementing modern data-driven architectures like data fabric, an approach that connects data across different platforms and locations, making it easier to access, manage, and analyze from one place. ADF can also play a key role in establishing a data mesh, a data architecture in which each team within the organization manages and shares its own data instead of relying on one central system.

Compliance and regulatory data management

ADF is an effective solution for industries subject to strict regulations, such as healthcare, where failure to protect patient data can result in civil penalties of up to $1,500,000 per year for violations of the same provision under HIPAA. The service provides monitoring, logging, and governance capabilities that help data teams keep data movement, lineage, and access traceable and secure.