AI-Powered Voice Assistant

Custom software development of an intelligent voice assistance solution

ABOUT the project

- Client: Leobit's Internal Project

- Location: USA

- Company Size: 100+ Employees

- Industry: Information Technology

- Solution: Custom Software

About the project

This project is a proof of concept (PoC) for a solution that combines a voice interface with Voice RAG (Retrieval-Augmented Generation) to provide users with intelligent voice assistance. It analyzes voice queries and delivers audio responses in near real-time, using information from the company’s knowledge base.

Customer

This was an internal Leobit project aimed at enhancing our customer communication and demonstrating our AI development capabilities.

Business Challenge

We needed to make our customer support team more efficient while seizing the opportunity to experiment with innovative AI technologies. Our specialists therefore decided to use Voice RAG technology to build an intelligent solution that integrates with our Leora voice assistant and gives quick audio responses to customers’ voice queries.

Project in detail

It took our team about three weeks to develop the solution’s PoC. The entire process can be roughly divided into three stages.

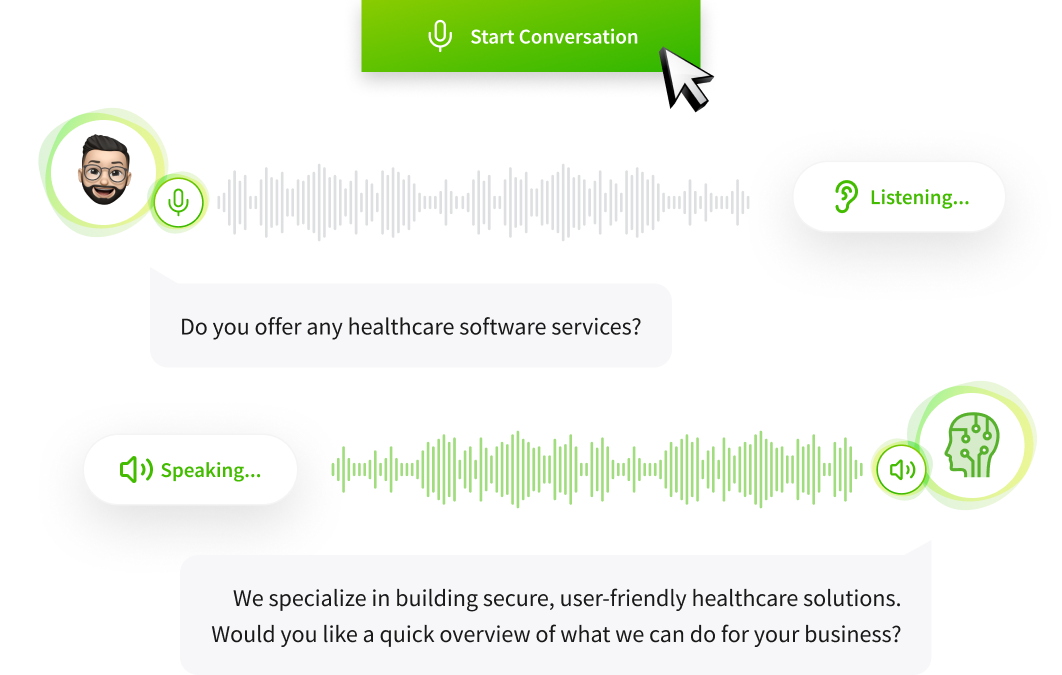

User-Friendly Voice Assistance

The tool provides informative responses with minimal user effort. The flow looks as follows:

- A user clicks the “Start Conversation” button and provides the tool with access to the microphone.

- The user speaks naturally and the system automatically detects when they have finished speaking.

- Within a few seconds, the user hears a voice response from Leora and sees a text transcription of both the query and the response on the screen.

- The user can continue asking questions within the same dialogue as the system maintains the context of the conversation.
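The source does not say how the system decides that the user has finished speaking. As a rough illustration of the idea only, the sketch below ends a turn after a run of quiet audio frames that follows detected speech. The `EndOfSpeechDetector` class, its options, and the threshold values are all hypothetical and are not taken from the PoC.

```typescript
// Hypothetical client-side illustration; names and thresholds are assumptions.
interface EndOfSpeechOptions {
  rmsThreshold: number;  // frames with an RMS level below this count as silence
  silenceFrames: number; // consecutive silent frames that end a turn
}

// Root-mean-square level of one audio frame (samples in [-1, 1]).
function rms(frame: Float32Array): number {
  if (frame.length === 0) return 0;
  let sum = 0;
  for (let i = 0; i < frame.length; i++) {
    sum += frame[i] * frame[i];
  }
  return Math.sqrt(sum / frame.length);
}

class EndOfSpeechDetector {
  private opts: EndOfSpeechOptions;
  private heardSpeech = false;
  private silentRun = 0;

  constructor(opts: EndOfSpeechOptions) {
    this.opts = opts;
  }

  // Feed one frame; returns true exactly once when the speaker has finished.
  push(frame: Float32Array): boolean {
    if (rms(frame) >= this.opts.rmsThreshold) {
      this.heardSpeech = true;
      this.silentRun = 0;
      return false;
    }
    // Silence before any speech is ignored, so the turn cannot end early.
    if (!this.heardSpeech) return false;
    this.silentRun += 1;
    if (this.silentRun >= this.opts.silenceFrames) {
      this.heardSpeech = false;
      this.silentRun = 0;
      return true;
    }
    return false;
  }
}
```

In a real deployment the frame size and thresholds would need tuning against background noise, and turn detection could equally be delegated to the speech service instead of the browser.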

Efficient Architecture for the Voice Assistance Workflow

We developed a convenient and flexible architecture where each part and service supports the voice assistance workflow. In particular:

- Web Audio API captures the microphone input, and the audio stream is transmitted through the WebSocket connection.

- RTMiddleTier receives the audio over WebSocket and streams it directly to the Azure OpenAI Real-Time API, which processes it without a separate speech-to-text conversion.

- Our custom script based on Azure Cognitive Search automatically performs a hybrid search (vector + full-text) and adds relevant documents from Azure Blob Storage to the context.

- GPT-4o processes the query together with the retrieved context and generates a personalized response that is optimized for audio.
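To make the hybrid search step more concrete, the sketch below shows Reciprocal Rank Fusion (RRF), a common way to merge a vector ranking and a full-text ranking into one list. In a hosted search service this fusion normally happens server-side, and the source does not show the PoC's custom script, so the function name, the document IDs, and the constant `k = 60` are illustrative assumptions.

```typescript
// Illustrative only: merges several ranked lists of document IDs with RRF.
// Each document scores 1 / (k + rank) per list it appears in (rank starts at 1).
function reciprocalRankFusion(rankings: string[][], k: number = 60): string[] {
  const scores = new Map<string, number>();
  for (const ranking of rankings) {
    ranking.forEach((docId, index) => {
      const rank = index + 1;
      scores.set(docId, (scores.get(docId) ?? 0) + 1 / (k + rank));
    });
  }
  // Highest fused score first.
  return Array.from(scores.entries())
    .sort((a, b) => b[1] - a[1])
    .map((entry) => entry[0]);
}

// Hypothetical result lists from the two search modes.
const vectorHits = ["doc-a", "doc-b", "doc-c"];
const fullTextHits = ["doc-b", "doc-d", "doc-a"];
const fused = reciprocalRankFusion([vectorHits, fullTextHits]);
```

Documents that appear near the top of both lists rise to the top of the fused list, which is why hybrid search tends to surface passages that match both the meaning and the wording of a query.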

The generated audio streams back through WebSocket and is delivered to the user through the useAudioPlayer hook.
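Audio captured with the Web Audio API arrives as 32-bit floating-point samples, while streaming speech services commonly expect 16-bit PCM. The sketch below shows that conversion as a pure function. It assumes a 16-bit PCM wire format, which the source does not state, and `floatTo16BitPCM` is a hypothetical helper name.

```typescript
// Assumption: the WebSocket payload carries 16-bit signed PCM samples.
// Converts Web Audio float samples in [-1, 1] to Int16 values, clamping overflow.
function floatTo16BitPCM(samples: Float32Array): Int16Array {
  const out = new Int16Array(samples.length);
  for (let i = 0; i < samples.length; i++) {
    const clamped = Math.max(-1, Math.min(1, samples[i]));
    // Negative values scale to -32768, positive values to 32767.
    out[i] = clamped < 0 ? Math.round(clamped * 0x8000) : Math.round(clamped * 0x7fff);
  }
  return out;
}
```

The resulting buffer can then be sent over the socket in small chunks. Playback on the client applies the reverse mapping before handing samples to the audio output.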

WebSocket also enables the tool to maintain stateful sessions by preserving conversation context and efficiently managing interruptions and reconnections.
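One way to picture stateful sessions is a small object that keeps recent conversation turns under a session ID, paired with a capped exponential backoff for reconnection attempts. This is a minimal sketch under assumptions: `ConversationSession`, `reconnectDelayMs`, and all default values are hypothetical, and the source does not describe how the PoC actually stores context or schedules reconnects.

```typescript
// Hypothetical names and defaults; not taken from the PoC.
interface Turn {
  role: "user" | "assistant";
  text: string;
}

// Keeps the most recent turns so context survives a dropped connection.
class ConversationSession {
  readonly id: string;
  private turns: Turn[] = [];
  private maxTurns: number;

  constructor(id: string, maxTurns: number = 20) {
    this.id = id;
    this.maxTurns = maxTurns;
  }

  addTurn(turn: Turn): void {
    this.turns.push(turn);
    // Drop the oldest turn once the history exceeds its cap.
    if (this.turns.length > this.maxTurns) this.turns.shift();
  }

  history(): Turn[] {
    return this.turns.slice();
  }
}

// Delay before reconnect attempt N (0-based): base * 2^N, capped at maxMs.
function reconnectDelayMs(attempt: number, baseMs: number = 250, maxMs: number = 8000): number {
  const exponent = Math.max(0, attempt);
  return Math.min(maxMs, baseMs * Math.pow(2, exponent));
}
```

After a successful reconnect, the client could send `history()` back to the server so the assistant resumes the same dialogue.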

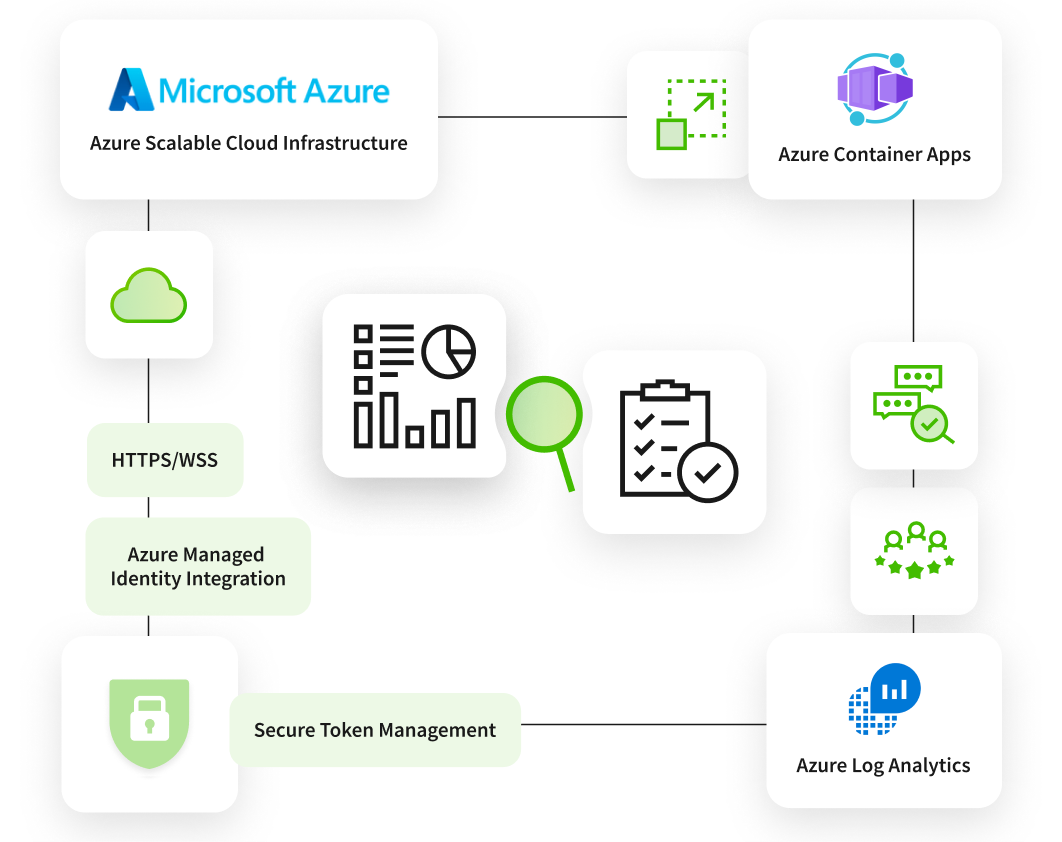

Functionality Enhanced with Azure Services

The tool relies on Azure’s scalable cloud infrastructure to process queries and deliver responses efficiently. Various Azure services provide additional value, enhancing the solution’s performance and reliability.

For example:

- The solution offers functionality for collecting user feedback, tracking performance metrics, and managing logs through the integration with Azure Log Analytics.

- Azure Managed Identity integration, secure token management, and HTTPS/WSS-encrypted connections keep the tool secure.

- Azure Container Apps hosting and its auto-scaling capabilities ensure the tool’s scalable deployment.

Explore the solution prototype

See a Voice-enabled RAG (Retrieval Augmented Generation) assistant built with React and Azure OpenAI Real-Time API, deployed via Azure Container Apps. It streams voice-in/voice-out interactions with low latency, retrieves contextual data via Azure Cognitive Search, and stores source documents in Azure Blob Storage.

Technology Solution

- Flexible cloud architecture based on Azure services and integrations with Azure AI services

- Fortified security and infrastructure control with Azure Managed Identity and Azure Log Analytics

- Responses initiated within 500 ms, achieved by streaming over WebSocket

Value Delivered

- Automated customer support through voice assistance

- Multi-language support with automatic detection (English, Ukrainian, Arabic, Polish, German, French, Spanish, Italian, etc.)

- Improved information accessibility through a natural voice interface

- Demonstration of Leobit’s technical expertise with AI tools